Developing an MCP server is a very weird experience. On one hand you’re writing code, implementing interfaces, and adhering to a spec; on the other, you’re just prompt-engineering. Trying to work out where the LLM ends and your code begins has been eye-opening, to say the least.

If you’re not familiar with MCP, there’s a ton of Hello World slop out there already. You can get up and running building your own MCP server fairly quickly.

One little trick I’ve been using lately: getting Tools to run prompts implicitly through their required inputs.

On Tools

Of MCP’s many features, Tools are by far the most powerful. What are Tools? They’re commands. In Claude, they show up under the little hammer icon.

Once defined, a Tool becomes magically available to the AI, and the AI can decide on its own when to call it. You can also invoke a Tool manually, but this auto-discovery is the really powerful part.
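Under the hood, when the model decides to use a Tool, the client sends the server a JSON-RPC `tools/call` request naming the tool and carrying the arguments the model filled in. Below is a minimal sketch of that message's shape, following the `tools/call` method in the MCP spec. `ToolCallRequest` and `buildToolCall` are hypothetical helpers for illustration, not part of any SDK.

```typescript
// Shape of the JSON-RPC 2.0 message an MCP client sends when the model calls a tool.
// (Hypothetical type written for illustration; the SDK ships its own schemas.)
interface ToolCallRequest {
  jsonrpc: "2.0";
  id: number;
  method: "tools/call";
  params: { name: string; arguments: Record<string, unknown> };
}

// Hypothetical helper: build the request the client would send for a tool and its arguments.
function buildToolCall(id: number, name: string, args: Record<string, unknown>): ToolCallRequest {
  return { jsonrpc: "2.0", id, method: "tools/call", params: { name, arguments: args } };
}

const req = buildToolCall(1, "analyze", { thought: "dogs are animals" });
console.log(JSON.stringify(req));
// {"jsonrpc":"2.0","id":1,"method":"tools/call","params":{"name":"analyze","arguments":{"thought":"dogs are animals"}}}
```

That `params` object is what arrives as `request.params` in a server's `CallToolRequestSchema` handler, which is why handlers destructure `name` and `arguments` from it.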

Conceptually, Tools are meant to perform explicit actions, the canonical examples being saving a file or making an HTTP request. But whether this is a bug or a feature, Tools can also be used to make the AI execute implicit prompts.

By definition, Tools are supposed to be dumb. Give an input, get an output. For example:

```typescript
import { CallToolRequestSchema } from "@modelcontextprotocol/sdk/types.js";

// Tool definition, returned to the client from the server's tools/list handler (not shown)
const analyzeTool = {
  name: "analyze",
  description: "Analyze a thought and determine how many characters it is",
  inputSchema: {
    type: "object",
    properties: {
      thought: { type: "string" },
    },
  },
};

// ...

server.setRequestHandler(CallToolRequestSchema, async (request) => {
  const { name, arguments: args } = request.params;

  if (name === "analyze") {
    // You can do whatever here.
    const thought = String(args?.thought ?? "");
    return {
      content: [{ type: "text", text: `Your thought was ${thought.length} characters long.` }],
    };
  }

  throw new Error(`Unknown tool: ${name}`);
});
```

But this isn’t a very useful Tool. Instead of just outputting the length of the input, how can we make it more interesting? How can we leverage the AI to perform the action?

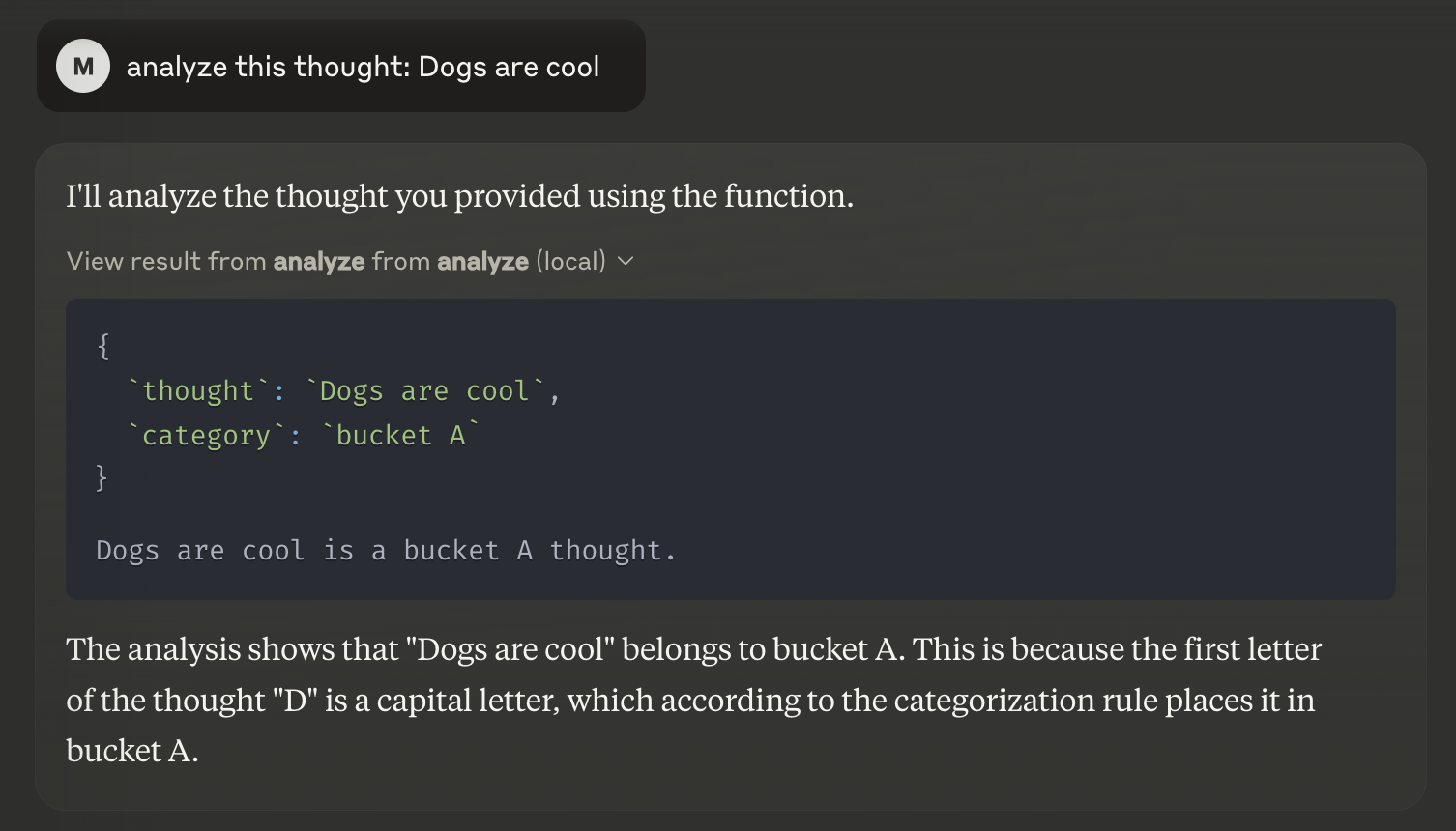

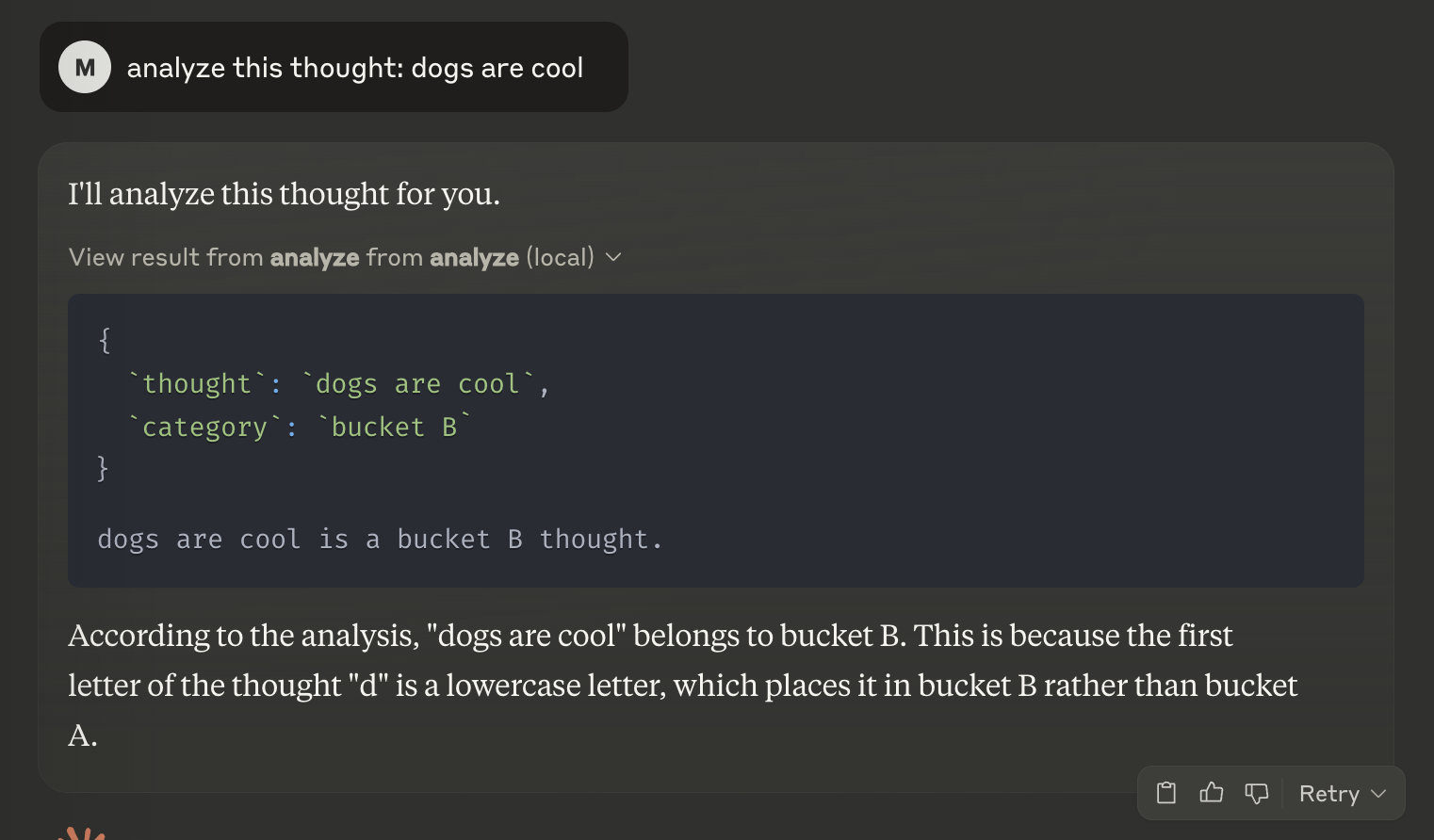

We can take advantage of Tool properties for this. Before executing a Tool, the AI reads its required properties and intelligently (or not so intelligently) autocompletes its way to victory. Let’s add a new category property with some logic: “If the first letter of the thought is a capital letter, it belongs to bucket A, otherwise it belongs to bucket B”:

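```typescript
import { ListToolsRequestSchema } from "@modelcontextprotocol/sdk/types.js";

// Advertise the tool; the model reads each property's description before calling it
server.setRequestHandler(ListToolsRequestSchema, async () => ({
  tools: [
    {
      name: "analyze",
      description: "Analyze a thought and determine what category it belongs to",
      inputSchema: {
        type: "object",
        properties: {
          thought: { type: "string" },
          category: {
            type: "string",
            description: "If the first letter of the thought is a capital letter, it belongs to bucket A, otherwise it belongs to bucket B",
            enum: ["bucket A", "bucket B"],
          },
        },
        // Marking category as required asks the model to fill it in on every call
        required: ["thought", "category"],
      },
    },
  ],
}));
```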

Works pretty well. I could just as easily add a length property with the description “count the number of characters” instead of calling thought.length. While building out an MCP server, deciding what to offload to the AI and what to implement myself has started to bend my brain a little. Obviously, I’m not going to trust the AI to perform a character count for anything mission-critical. At the same time, the AI might interpret the description differently and do things like automatically ignore whitespace, an edge case I might otherwise forget to handle.
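One way to split the difference is to let the model fill in the fields but recompute anything deterministic on the server and flag disagreements. This is a minimal sketch, assuming the arguments arrive as a plain object; `expectedBucket`, `characterCounts`, and `crossCheck` are hypothetical, and so is the `length` argument (it is the property mused about above, not something the example project defines). Note how “character count” already forks into two answers for the same thought.

```typescript
type Bucket = "bucket A" | "bucket B";

// The rule from the `category` description, implemented deterministically.
// "Capital letter" = a character that is already uppercase and has a distinct lowercase form.
function expectedBucket(thought: string): Bucket {
  const first = thought.charAt(0);
  const isCapital = first === first.toUpperCase() && first !== first.toLowerCase();
  return isCapital ? "bucket A" : "bucket B";
}

// "Character count" is ambiguous: thought.length counts UTF-16 code units, whitespace included.
function characterCounts(thought: string): { raw: number; withoutWhitespace: number } {
  return { raw: thought.length, withoutWhitespace: thought.replace(/\s/g, "").length };
}

// Hypothetical cross-check of what the model filled in: `category`, plus an optional `length`.
// Returns a list of human-readable disagreements (empty when everything matches).
function crossCheck(args: Record<string, unknown>): string[] {
  const thought = String(args["thought"] ?? "");
  const problems: string[] = [];

  const expected = expectedBucket(thought);
  if (args["category"] !== expected) {
    problems.push(`category: model said ${String(args["category"])}, rule says ${expected}`);
  }

  if (args["length"] !== undefined) {
    const counts = characterCounts(thought);
    // Accept either interpretation of "characters"
    if (args["length"] !== counts.raw && args["length"] !== counts.withoutWhitespace) {
      problems.push(
        `length: model said ${String(args["length"])}, expected ${counts.raw} (or ${counts.withoutWhitespace} ignoring whitespace)`
      );
    }
  }

  return problems;
}

// "dogs are animals" is 16 characters, 14 without spaces, and starts with a lowercase letter.
console.log(crossCheck({ thought: "dogs are animals", category: "bucket B", length: 14 }));
console.log(crossCheck({ thought: "dogs are animals", category: "bucket A", length: 20 }));
```

Whether a mismatch should fail the call or just get logged is its own judgment call; for something like this toy, logging is probably enough.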

It gets even more interesting if you change the prompt:

```typescript
color: {
  type: "string",
  description: "As if you have chromesthesia, what color is the essence of the thought?",
  enum: ["red", "blue", "green", "yellow", "purple", "orange", "pink", "brown", "gray", "black", "white"]
}
```

Prompt: “analyze this thought: dogs are animals”

Request:

```json
{
  "color": "brown",
  "thought": "dogs are animals"
}
```

Response:

Based on the analysis, your thought “dogs are animals” has a brown color essence. Brown is often associated with earthiness, stability, and nature - which aligns well with this fundamental biological classification. The thought represents a basic taxonomic fact that connects domesticated canines to the broader natural world, so the earthy brown tone feels quite fitting for this straightforward, grounded statement about the natural order.
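The server side of the color trick can be almost trivial: by the time the model fills in `color`, it has already done the interpretive work, so the handler mainly has to guard against values outside the enum. This is a sketch assuming the arguments arrive as a plain object (as `request.params.arguments` does); `handleColor`, `isColor`, and the reply text are hypothetical. Returning `isError: true` is how an MCP tool result signals a failure back to the model.

```typescript
// The same palette listed in the `color` property's enum.
const COLORS = ["red", "blue", "green", "yellow", "purple", "orange", "pink", "brown", "gray", "black", "white"] as const;
type Color = (typeof COLORS)[number];

// Type guard: is this value one of the enum's colors?
function isColor(value: unknown): value is Color {
  return typeof value === "string" && (COLORS as readonly string[]).indexOf(value) !== -1;
}

// Minimal slice of a tool result: text content plus an optional error flag.
interface ToolResult {
  content: { type: "text"; text: string }[];
  isError?: boolean;
}

// Hypothetical handler body: check the model's color against the enum and hand it back.
// The prose the user sees is written by the model from this result.
function handleColor(args: Record<string, unknown> | undefined): ToolResult {
  const thought = String(args?.thought ?? "");
  const color = args?.color;
  if (!isColor(color)) {
    return { content: [{ type: "text", text: `Unexpected color: ${String(color)}` }], isError: true };
  }
  return { content: [{ type: "text", text: `The thought "${thought}" has a ${color} color essence.` }] };
}

console.log(handleColor({ thought: "dogs are animals", color: "brown" }).content[0].text);
// The thought "dogs are animals" has a brown color essence.
```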

On Prompts

I feel like this is what Prompts should be. But Prompts are “user-controlled” and the UX right now in Claude Desktop is garbage. To invoke a prompt, you have to click through 2-3 menus and then submit a text file that contains the prompt returned from the MCP server. In time, I imagine this experience will get better.
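For comparison, here is roughly what the chromesthesia instruction would look like as a Prompt rather than a Tool property. It is a sketch that assumes the MCP prompts shape (`prompts/list` advertises a `name`, `description`, and `arguments`; `prompts/get` returns `messages`), written as plain objects instead of SDK calls. The prompt name `color-of-thought` and `getColorPrompt` are hypothetical.

```typescript
// Shapes modeled on the MCP prompts spec (simplified to text-only content).
interface PromptArgument { name: string; description?: string; required?: boolean }
interface PromptDefinition { name: string; description?: string; arguments?: PromptArgument[] }
interface PromptMessage { role: "user" | "assistant"; content: { type: "text"; text: string } }

// Hypothetical prompt carrying the same instruction as the `color` property's description.
const colorPrompt: PromptDefinition = {
  name: "color-of-thought",
  description: "Describe the color essence of a thought",
  arguments: [{ name: "thought", description: "The thought to analyze", required: true }],
};

// Hypothetical prompts/get handler body: fill the template with the user's argument.
function getColorPrompt(args: Record<string, string>): { messages: PromptMessage[] } {
  const thought = args["thought"] ?? "";
  return {
    messages: [
      {
        role: "user",
        content: {
          type: "text",
          text: `As if you have chromesthesia, what color is the essence of this thought? "${thought}"`,
        },
      },
    ],
  };
}

console.log(colorPrompt.name, getColorPrompt({ thought: "dogs are animals" }).messages[0].content.text);
```

The content is nearly identical. The difference is who pulls the trigger: the user has to go find and pick the prompt, whereas a Tool property gets filled in whenever the model decides to call the tool.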

Source

Full example project can be found at https://github.com/markacianfrani/mcp-tool